Manticore Search has supported vector search since version 6.3.0! Let’s look at what it is, what benefits it brings, and how to use it, taking its integration into the GitHub issue search demo as an example.

Full-text search and Vector search

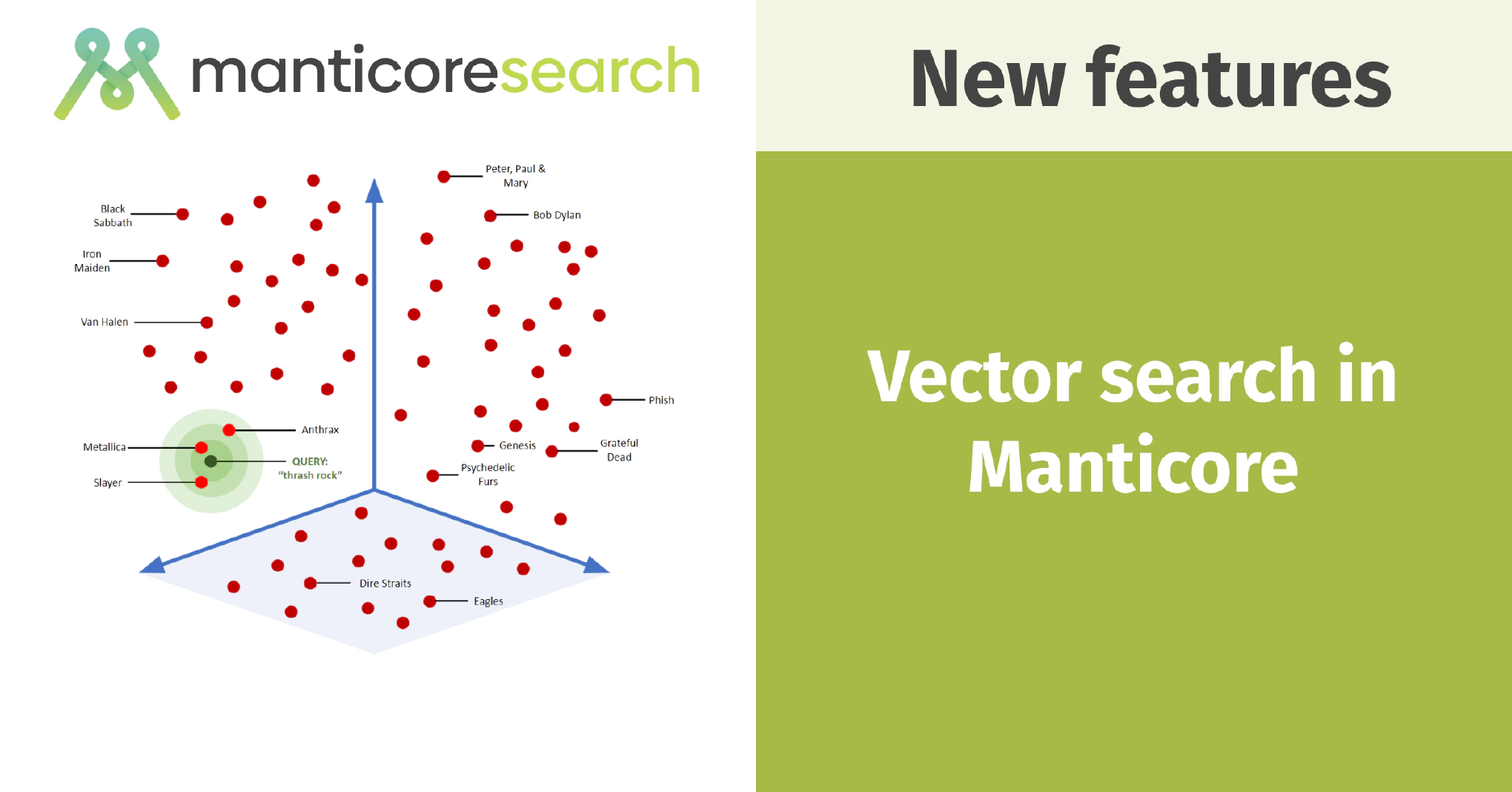

Full-text search is useful because it allows for efficient searching by finding exact matches of keywords. However, it mainly relies on keywords, which can sometimes be limiting. In contrast, semantic search uses vector similarity and machine learning to understand the meaning behind your search and finds documents similar to what you’re looking for. This method often leads to better results and allows for searches in a more natural and relaxed style.

We are pleased to introduce this powerful feature in Manticore Search, implemented as a part of the Columnar library. Let’s explore how to get started with it.

Get started with Vector Search and Manticore

A quick introduction to vector search: to perform vector search, text must be converted into vectors, which are typically ordered lists of floating-point numbers. The query is converted in the same way. The query vector is then compared with the document vectors, and the documents are sorted by how close they are to the query. For more background, read our other article about vector search in old and modern databases.

We’ll discuss how to turn text into vectors shortly, but first, let’s explore how to work with vectors in Manticore Search.

Installation

Vector search functionality has been available in Manticore since version 6.3.0. Make sure Manticore is installed with the Columnar library. If you need help with installation, check the documentation.

Creating a table

First, we need to create a real-time table whose schema includes a field of type float_vector for storing vectors. The field is configured with a few options: knn_type selects the index type (HNSW here), knn_dims sets the number of dimensions of each vector, and hnsw_similarity sets the distance function. Let’s create a simple table:

create table test ( title text, image_vector float_vector knn_type='hnsw' knn_dims='4' hnsw_similarity='l2' );

You can read the detailed docs here.

Inserting data

Once the table is set up, we need to populate it with some data before we can retrieve any information from it.

insert into test values ( 1, 'yellow bag', (0.653448,0.192478,0.017971,0.339821) ), ( 2, 'white bag', (-0.148894,0.748278,0.091892,-0.095406) );

Manticore Search supports SQL, making it user-friendly for those familiar with SQL databases. However, if you prefer to use plain HTTP requests, that option is available too! Here’s how you can perform the earlier SQL operation using HTTP:

POST /insert

{

"index":"test",

"id":1,

"doc": { "title" : "yellow bag", "image_vector" : [0.653448,0.192478,0.017971,0.339821] }

}

POST /insert

{

"index":"test",

"id":2,

"doc": { "title" : "white bag", "image_vector" : [-0.148894,0.748278,0.091892,-0.095406] }

}
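If you are scripting the HTTP route, the two request bodies above can be generated from data instead of being written by hand. The following is a minimal Python sketch using only the standard library. The helper names `build_insert_payload` and `send_insert` are made up for illustration, the table is assumed to be the `test` table created earlier, and the base URL assumes Manticore's HTTP interface listens on 127.0.0.1:9308.

```python
import json
import urllib.request

# Assumption: Manticore's HTTP interface is reachable at this address.
MANTICORE_URL = "http://127.0.0.1:9308"


def build_insert_payload(table, doc_id, title, vector):
    """Return the JSON body for one POST /insert request."""
    return {
        "index": table,
        "id": doc_id,
        "doc": {"title": title, "image_vector": [float(x) for x in vector]},
    }


def send_insert(payload, base_url=MANTICORE_URL):
    """POST one document to /insert and return the decoded JSON reply."""
    request = urllib.request.Request(
        base_url + "/insert",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))


# The same two documents as in the SQL example.
rows = [
    (1, "yellow bag", (0.653448, 0.192478, 0.017971, 0.339821)),
    (2, "white bag", (-0.148894, 0.748278, 0.091892, -0.095406)),
]
payloads = [build_insert_payload("test", i, title, vec) for i, title, vec in rows]

for payload in payloads:
    print(json.dumps(payload))
    # With a running Manticore instance, send it with: send_insert(payload)
```

Building the payload separately from sending it keeps the request body easy to inspect or log before anything reaches the server.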

Querying the data

The final step is to query the table with a pre-calculated query vector, prepared with the same AI model as the document vectors. We will explain how to obtain such vectors later in this article. In knn(), the arguments are the vector field, the number of nearest documents to return, the query vector, and an optional ef value that trades search speed for accuracy. For now, here is an example of querying the table with a ready-made vector:

mysql> select id, knn_dist() from test where knn ( image_vector, 5, (0.286569,-0.031816,0.066684,0.032926), 2000 );

You’ll get this:

+------+------------+

| id | knn_dist() |

+------+------------+

| 1 | 0.28146550 |

| 2 | 0.81527930 |

+------+------------+

2 rows in set (0.00 sec)

That is, two documents sorted by how close their vectors are to the query vector.
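As a sanity check on what knn_dist() reports here: with hnsw_similarity='l2', the two distances in the table above coincide with the squared Euclidean distance (no square root) between each stored vector and the query vector. The Python sketch below recomputes them by brute force. It only illustrates the arithmetic, not how the HNSW index searches internally, and the function name `squared_l2` is ours.

```python
def squared_l2(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


# Document vectors and query vector copied from the SQL examples.
documents = {
    1: (0.653448, 0.192478, 0.017971, 0.339821),
    2: (-0.148894, 0.748278, 0.091892, -0.095406),
}
query = (0.286569, -0.031816, 0.066684, 0.032926)

# Compare the query with every document and sort by closeness.
ranked = sorted(
    ((doc_id, squared_l2(vector, query)) for doc_id, vector in documents.items()),
    key=lambda pair: pair[1],
)

for doc_id, distance in ranked:
    print(doc_id, distance)
```

The printed distances agree with the knn_dist() column to about six decimal places. The small remaining difference is consistent with the vectors being stored as single-precision floats.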

Manticore Vector search in supported clients

Manticore Search offers clients for various languages, all supporting data querying using vector search. For instance, here’s how to perform this with the PHP client:

<?php

use Manticoresearch\Client;

use Manticoresearch\Search;

use Manticoresearch\Query\KnnQuery;

// Create the Client first

$params = [

'host' => '127.0.0.1',

'port' => 9308,

];

$client = new Client($params);

// Create Search object and set index to query

$search = new Search($client);

$search->setIndex('test');

// Query now

// knn() only configures the search; get() executes it and returns the result set
$results = $search->knn('image_vector', [0.286569,-0.031816,0.066684,0.032926], 5)->get();

Easy, right? If you want to know more about various options, you can check the PHP client docs.
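For languages without a dedicated client, the same query can be sent as JSON to the HTTP /search endpoint. The Python sketch below only builds the request body. The key names (`knn`, `field`, `query_vector`, `k`, `ef`) follow the JSON KNN syntax as we understand it from the Manticore documentation, so treat them as an assumption and check them against your version. The helper name `build_knn_request` is made up for illustration.

```python
import json


def build_knn_request(table, field, query_vector, k, ef=None):
    """Return a JSON body for POST /search that asks for the k nearest documents."""
    knn = {
        "field": field,
        "query_vector": [float(x) for x in query_vector],
        "k": k,
    }
    if ef is not None:
        # Optional accuracy/speed knob, mirroring the 4th knn() argument in SQL.
        knn["ef"] = ef
    return {"index": table, "knn": knn}


request_body = build_knn_request(
    "test",
    "image_vector",
    (0.286569, -0.031816, 0.066684, 0.032926),
    k=5,
    ef=2000,
)
print(json.dumps(request_body, indent=2))
```

The resulting JSON can be posted to /search with any HTTP client, in the same way as the /insert requests shown earlier.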

How to Convert Text to Vectors?

Let’s now discuss how we can get a vector from text.

An “embedding” is a vector that represents text and captures its semantic meaning. Currently, Manticore Search does not automatically create embeddings. However, we are working on adding this feature and have an open ticket on GitHub for it. Until this feature is available, you will need to prepare embeddings externally. Therefore, we need a method to convert text into a list of floats to store and later query using vector search.

You can use various solutions for converting texts to embeddings. We recommend sentence transformers from Hugging Face, which include pre-trained models that convert text into high-dimensional vectors. These models capture the semantic similarities between texts effectively.

The process of converting text to vectors using sentence transformers includes these steps:

- Load the pre-trained model: Begin by loading the desired sentence transformer model from the Hugging Face repository. These models, trained on extensive text data, can effectively grasp the semantic relationships between words and sentences.

- Tokenize and encode text: Use the model to break the text into tokens (words or subwords) and convert these tokens into numerical representations.

- Compute vector representations: The model calculates vector representations for the text. These vectors are usually high-dimensional (e.g., 768 dimensions) and numerically represent the semantic meaning of the text.

- Store vectors in the database: Once you have the vector representations, store them in Manticore along with the corresponding text.

- Query: To find texts similar to a query, convert the query text into a vector using the same model. Use Manticore Vector Search to locate the nearest vectors (and their associated texts) in the database.
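The steps above can be sketched in Python with the sentence-transformers package (a third-party dependency, installed with `pip install sentence-transformers`). This is a minimal sketch rather than the code used in our demo: the helper names are made up for illustration, and the field name `embeddings` is an assumption about your table schema. Note that the model used here, all-MiniLM-L12-v2, produces 384-dimensional vectors, so the float_vector field would need knn_dims='384'.

```python
def load_model(name="sentence-transformers/all-MiniLM-L12-v2"):
    """Step 1: load a pre-trained model (downloaded on first use)."""
    # Imported lazily so the helpers below work without the package installed.
    from sentence_transformers import SentenceTransformer
    return SentenceTransformer(name)


def texts_to_docs(model, texts, first_id=1):
    """Steps 2-4: encode the texts and pair each one with its vector for storage."""
    # encode() tokenizes the texts and computes their embeddings in one call.
    vectors = model.encode(list(texts))
    docs = []
    for offset, (text, vector) in enumerate(zip(texts, vectors)):
        docs.append({
            "id": first_id + offset,
            # "embeddings" is a hypothetical float_vector field name.
            "doc": {"title": text, "embeddings": [float(x) for x in vector]},
        })
    return docs


def embed_query(model, query):
    """Step 5: encode the query with the same model so the vectors are comparable."""
    return [float(x) for x in model.encode([query])[0]]


# Usage (needs the package and, on first run, a model download):
#   model = load_model()
#   docs = texts_to_docs(model, ["yellow bag", "white bag"])
#   query_vector = embed_query(model, "bright coloured bag")
```

Each entry returned by `texts_to_docs` has the shape of the "id" and "doc" parts of an /insert request, and the output of `embed_query` is what goes into the knn() call as the query vector.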

Semantic Search in the GitHub Demo

In a previous article, we built a demo showcasing the usage of Manticore Search in a real-world example: GitHub issue search. It worked well and already provided benefits over GitHub’s native search. With the latest update to Manticore Search, which includes vector search, we thought it would be interesting to add semantic search capability to our demo. Let’s explore how it was implemented.

Although most libraries that generate embeddings are written in Python, we’re using PHP in our demo. To integrate vector search into our demo application, we had two options:

- An API server (which can utilize Python but might be slower)

- A PHP extension, which we have chosen to implement.

While looking into how to create text embeddings quickly and directly, we discovered a few helpful tools that allowed us to achieve our goal. Consequently, we created an easy-to-use PHP extension that can generate text embeddings. This extension lets you pick any model from Sentence Transformers on Hugging Face. It is built on the Candle ML framework, which is written in Rust and is a part of the well-known Hugging Face ecosystem. The PHP extension itself is also written in Rust using the ext-php-rs library. This approach ensures the extension runs fast while still being easy to develop.

Connecting all the different parts was challenging, but as a result, we have this extension that easily turns text into vectors directly within PHP code, simplifying the process significantly.

<?php

use Manticore\Ext\Model;

// One from <https://huggingface.co/sentence-transformers>

$model = Model::create("sentence-transformers/all-MiniLM-L12-v2");

var_dump($model->predict("Hello world"));

The great advantage is that the model is remarkably fast at generating embeddings, and it only needs to be loaded once. All subsequent calls are executed in just a few milliseconds, providing good performance for PHP and sufficient speed for the demo project. Consequently, we decided to proceed with this approach. You can check and use our extension on GitHub here.

The challenging part is done; next, we need to integrate it into the Demo project with minimal code changes.

Integration into existing code

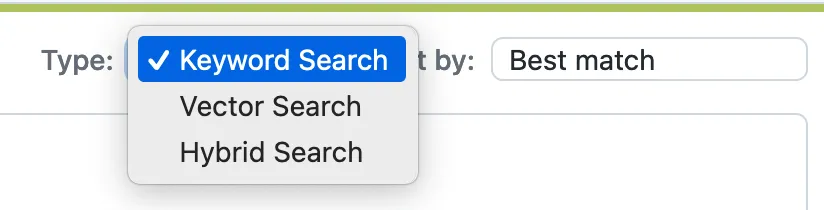

To integrate vector search capability, we added a small switch to the header that allows users to change the search mode. By default, we use keyword search (also known as “full-text search”). It looks like this:

The changes on the backend side were not hard. We added a call to the text-to-vector conversion extension mentioned above. Here is what the code looks like:

// Add vector search embeddings if we have a query

if ($search_query) {

if ($search !== 'keyword-search') {

$embeddings = result(TextEmbeddings::get($query));

$filters['embeddings'] = $embeddings;

}

if ($search === 'vector-search') {

$filters['vector_search_only'] = true;

}

}

Hybrid search, which combines semantic search and keyword search, can be implemented in various ways. In this case, we simply added a full-text filter to the vector search results. You can see the updated code here. Here is how it looks:

protected static function getSearch(string $table, string $query, array $filters): Search {

$client = static::client();

$Index = $client->index($table);

$vector_search_only = $filters['vector_search_only'] ?? false;

$query = $vector_search_only ? '' : $query;

$Query = new BoolQuery();

if (isset($filters['embeddings'])) {

$Query = new KnnQuery('embeddings', $filters['embeddings'], 1000);

}

if ($query) {

$QueryString = new QueryString($query);

$Query->must($QueryString);

}

return $Index->search($Query);

}
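To make the idea of "a full-text filter on the vector search results" concrete, here is a conceptual Python sketch that works on in-memory data. It is not how Manticore implements hybrid search internally, and it makes no claim about the order in which Manticore applies the filter. It only shows one simple reading of the approach: take the nearest documents by vector distance, then keep those whose text contains every keyword. The function names and the whitespace-based keyword matching are simplifications made for illustration.

```python
def squared_l2(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def hybrid_search(docs, query_vector, keywords, k):
    """Take the k nearest documents, then keep those containing every keyword."""
    nearest = sorted(docs, key=lambda d: squared_l2(d["vector"], query_vector))[:k]
    wanted = [word.lower() for word in keywords]
    return [
        doc for doc in nearest
        if all(word in doc["title"].lower().split() for word in wanted)
    ]


# The two documents and the query vector from the earlier examples.
docs = [
    {"id": 1, "title": "yellow bag", "vector": (0.653448, 0.192478, 0.017971, 0.339821)},
    {"id": 2, "title": "white bag", "vector": (-0.148894, 0.748278, 0.091892, -0.095406)},
]
query_vector = (0.286569, -0.031816, 0.066684, 0.032926)

# No keywords: behaves like the "vector search only" mode.
vector_only = hybrid_search(docs, query_vector, [], k=5)
# With keywords: vector ranking plus a keyword filter.
hybrid = hybrid_search(docs, query_vector, ["white"], k=5)

print([d["id"] for d in vector_only], [d["id"] for d in hybrid])
```

With no keywords both documents come back in distance order, while adding the keyword "white" leaves only document 2. This mirrors the PHP code above, where an empty query string means the full-text condition is simply not added.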

As we can see, integrating semantic search into the demo was straightforward. Next, let’s see what we achieve with Vector Search.

Comparing what we got in our Demo with Semantic Search

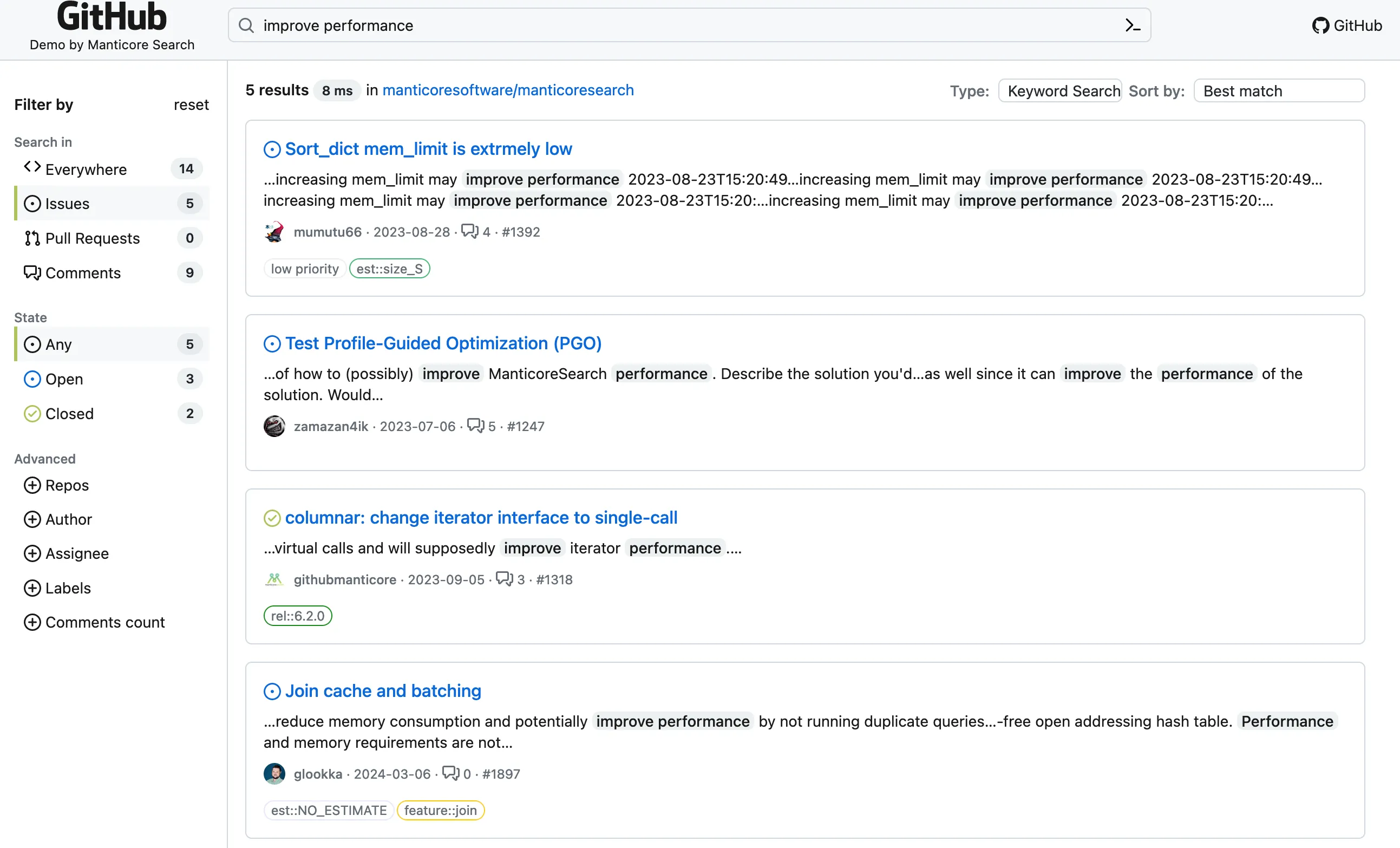

Let’s compare some simple results from the default keyword search with those from semantic search on our demo page.

For example, doing a keyword search for "improve performance" might give results like this:

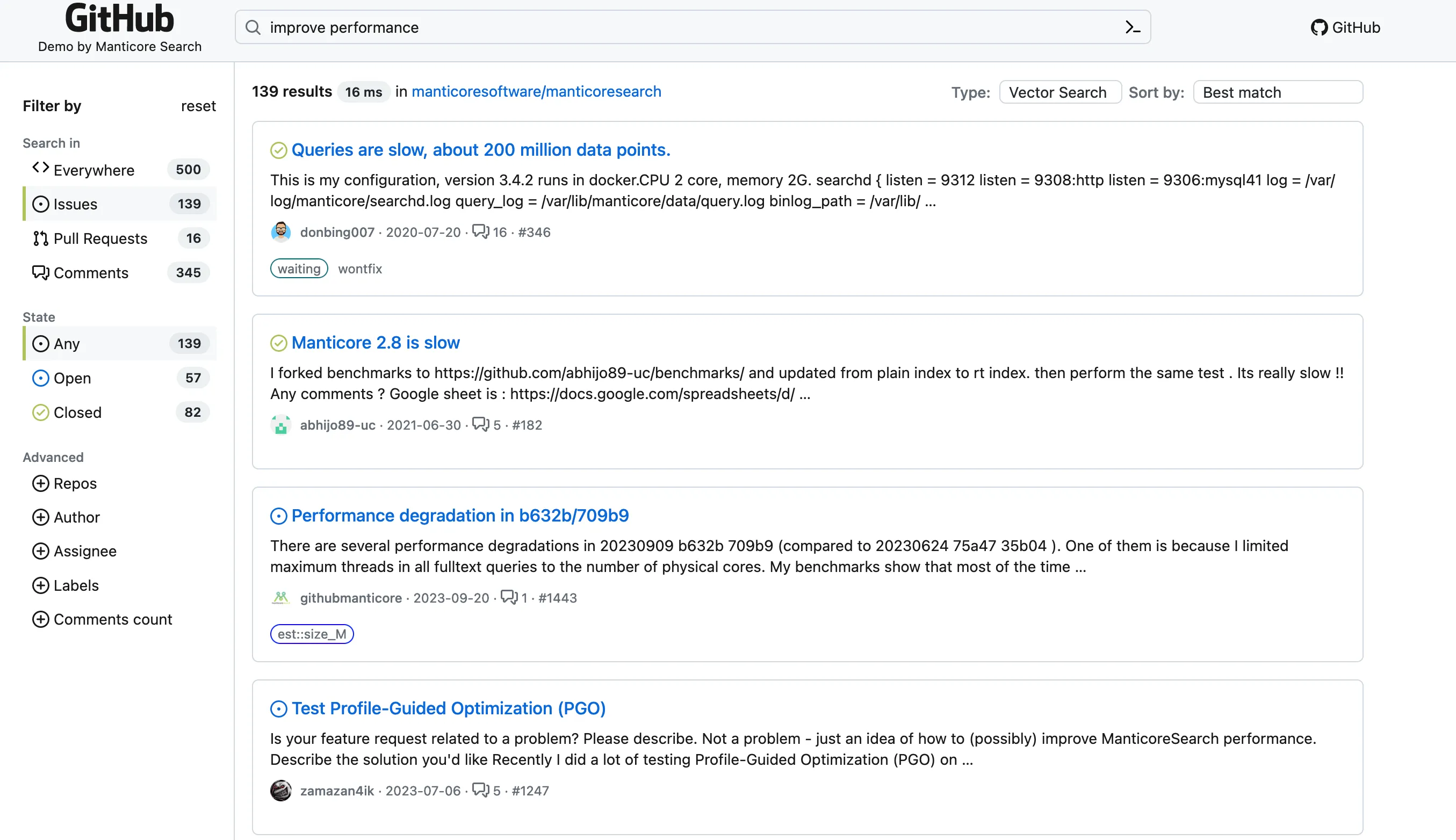

That looks legitimate and fair enough, but keyword search looks ONLY for exact matches of the original phrase. Let’s compare it with a semantic search:

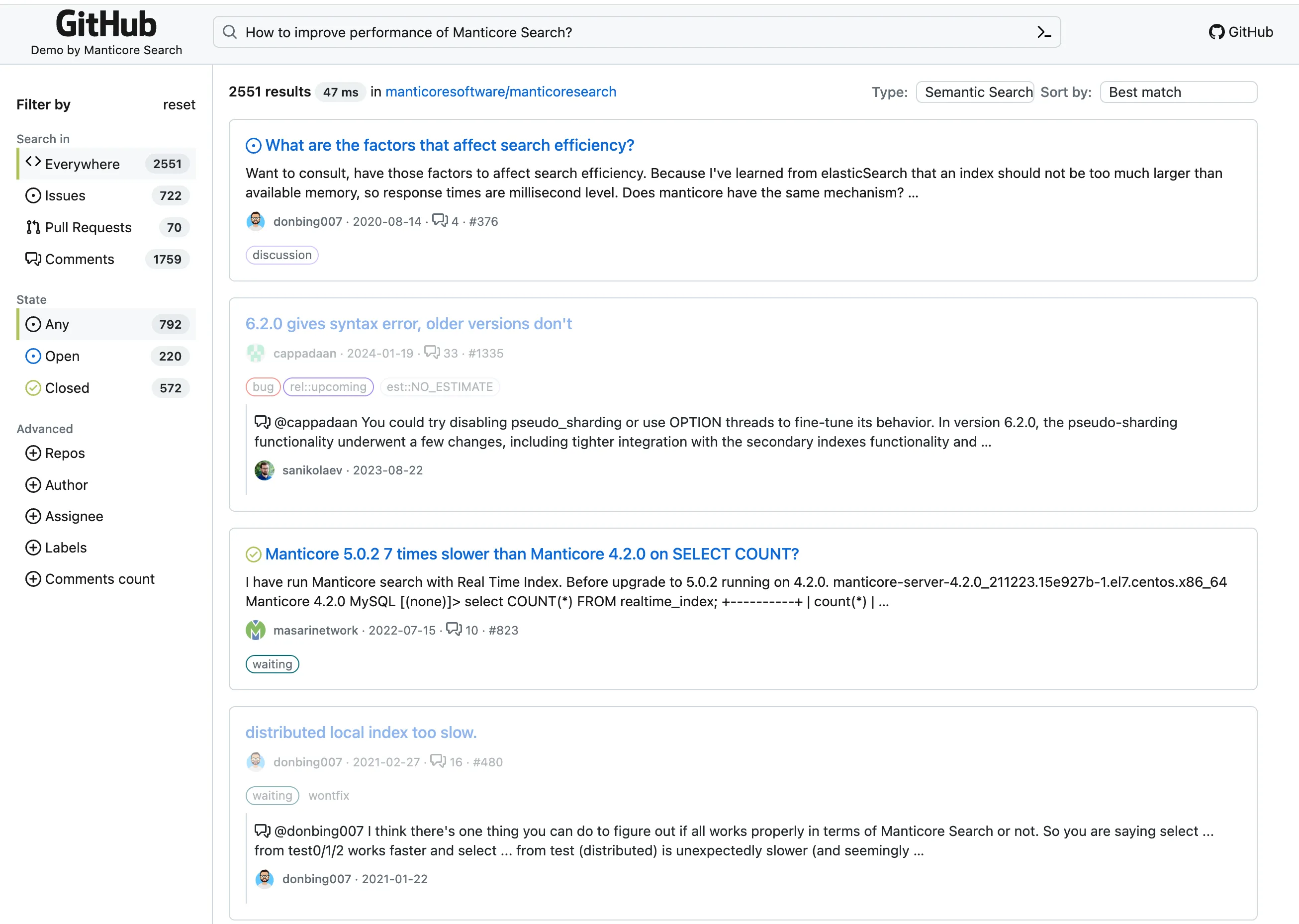

That looks somewhat more flexible, though not perfect. However, we can also ask a question like “How to improve performance of Manticore Search?” and get additional relevant results.

Here, you can see that thanks to vector search and the machine learning model behind it, the system can precisely understand what we’re looking for and provide relevant results. However, if you attempt the same search in keyword mode, you won’t get any results.

Conclusion

We’re excited about Manticore’s new capabilities! Now, with vector search and help from some external tools, it’s easy to set up semantic search. Semantic search is a smart way to understand queries better. Instead of just looking for exact words, it figures out the meaning behind what people ask. This means it can find more relevant answers, especially for complicated or detailed questions.

By making searches more like natural conversations, semantic search closes the gap between how we talk and how data is organized. It provides precise and useful results that fit exactly what someone is looking for, making searching with Manticore a lot better.

We are now working on bringing the auto-embeddings functionality to Manticore. This will allow for automatic conversion of text into vectors, making everything simpler and faster. Keep an eye on our progress here on GitHub. With these improvements, searching with Manticore is getting even better!