We recently released Manticore 3.0.0 with many improvements, including new optimizations that boost performance. In this article we compare the performance of the new version with that of Sphinx 3.1.1.

TL;DR

Manticore shows:

- about 2x higher search performance in some cases, especially with longer queries

- smaller, but still consistent, gains in all the other search tests

- except for indexing time, where Sphinx is about 2% faster

Test environment

As in our previous benchmark of Manticore 2.7 vs Sphinx 3, we use a dataset of 11.6M user comments from Hacker News.

The benchmark was conducted with the following conditions:

- Hacker News curated comments dataset of 2016 in CSV format

- OS: Ubuntu 18.04.1 LTS (Bionic Beaver), kernel: 4.15.0-47-generic

- CPU: Intel(R) Core(TM) i7-3770 CPU @ 3.40GHz, 4 cores / 8 threads

- 32 GB RAM

- HDD

- Docker version 18.09.2

- Base image for indexer and searchd: ubuntu:bionic

- Manticore Search was built in Docker; Sphinx binaries were downloaded from the Sphinx website, since there is no open source code to build Sphinx 3 from

- stress-tester for benchmarking

The config is identical for Manticore and Sphinx:

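```
source full
{
    type = csvpipe
    # the first CSV column is the document ID; grep -v line_number filters out the header row
    csvpipe_command = cat /root/hacker_news_comments.prepared.csv|grep -v line_number
    csvpipe_attr_uint = story_id
    csvpipe_attr_timestamp = story_time
    csvpipe_field = story_text
    csvpipe_field = story_author
    csvpipe_attr_uint = comment_id
    csvpipe_field = comment_text
    csvpipe_field = comment_author
    csvpipe_attr_uint = comment_ranking
    csvpipe_attr_uint = author_comment_count
    csvpipe_attr_uint = story_comment_count
}

index full
{
    path = /root/idx_full
    source = full
    # strip HTML markup from the indexed text
    html_strip = 1
    # lock the cached index data in RAM
    mlock = 1
}

searchd
{
    # SphinxQL (MySQL protocol) on port 9306
    listen = 9306:mysql41
    query_log = /root/query.log
    log = /root/searchd.log
    pid_file = /root/searchd.pid
    # an empty value disables the binary log
    binlog_path =
    # 0 disables the query cache, so repeated queries are not served from cache
    qcache_max_bytes = 0
}
```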

Indexation

Indexation took 1263 seconds for Manticore and 1237 seconds for Sphinx:

Manticore:

```
indexing index 'full'...
collected 11654429 docs, 6198.6 MB
creating lookup: 11654.4 Kdocs, 100.0% done
creating histograms: 11654.4 Kdocs, 100.0% done
sorted 1115.7 Mhits, 100.0% done
total 11654429 docs, 6198580642 bytes
total 1263.497 sec, 4905890 bytes/sec, 9223.94 docs/sec
total 22924 reads, 1.484 sec, 238.4 kb/call avg, 0.0 msec/call avg
total 11687 writes, 11.773 sec, 855.1 kb/call avg, 1.0 msec/call avg
```

Sphinx:

```
indexing index 'full'...
collected 11654429 docs, 6198.6 MB
sorted 1115.7 Mhits, 100.0% done
total 11654429 docs, 6.199 Gb
total 1236.9 sec, 5.011 Mb/sec, 9422 docs/sec
```

So on this dataset and index schema, Manticore indexes about 2% slower than Sphinx.
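For reference, the ~2% is the relative difference between the two reported totals; the docs/sec figures give the same ratio:

$$
\frac{1263.497 - 1236.9}{1236.9} = \frac{26.597}{1236.9} \approx 0.0215 \approx 2.15\%,
\qquad
\frac{9422}{9223.94} \approx 1.021
$$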

Performance tests

Both instances were warmed up before testing. The index files were as follows:

Manticore:

```
root@bench# ls -lah /var/lib/docker/volumes/64746c338de981014c7c1ea93d4c55f55e13de63ac9e49c2d31292bb239a82b6/_data
total 4.7G
drwx------ 2 root root 4.0K May 14 09:03 .
drwxr-xr-x 3 root root 4.0K May 14 09:01 ..
-rw-r--r-- 1 root root 362M May 13 17:22 idx_full.spa
-rw-r--r-- 1 root root 3.1G May 13 17:31 idx_full.spd
-rw-r--r-- 1 root root 90M May 13 17:31 idx_full.spe
-rw-r--r-- 1 root root 628 May 13 17:31 idx_full.sph
-rw-r--r-- 1 root root 29K May 13 17:22 idx_full.sphi
-rw-r--r-- 1 root root 6.5M May 13 17:31 idx_full.spi
-rw------- 1 root root 0 May 14 09:03 idx_full.spl
-rw-r--r-- 1 root root 1.4M May 13 17:22 idx_full.spm
-rw-r--r-- 1 root root 1.1G May 13 17:31 idx_full.spp
-rw-r--r-- 1 root root 59M May 13 17:22 idx_full.spt
```

Sphinx:

```
root@bench /var/lib/docker/volumes # ls -lah /var/lib/docker/volumes/bd28586b5102ff91d4c367f612e2f7b1fe0a066917c8e0b4636d203dd3ba5b0b/_data
total 4.6G
drwx------ 3 root root 4.0K May 14 09:04 .
drwxr-xr-x 3 root root 4.0K May 14 09:03 ..
-rw-r--r-- 1 root root 362M May 13 19:09 idx_full.spa
-rw-r--r-- 1 root root 3.1G May 13 19:17 idx_full.spd
-rw-r--r-- 1 root root 27M May 13 19:17 idx_full.spe
-rw-r--r-- 1 root root 648 May 13 19:17 idx_full.sph
-rw-r--r-- 1 root root 6.3M May 13 19:17 idx_full.spi
-rw-r--r-- 1 root root 8 May 13 19:09 idx_full.spj
-rw-r--r-- 1 root root 1.4M May 13 19:09 idx_full.spk
-rw------- 1 root root 0 May 14 09:04 idx_full.spl
-rw-r--r-- 1 root root 1.1G May 13 19:17 idx_full.spp
```

Test 1 - time to process top 1000 terms from the collection

First, a simple test: how long it takes to find documents containing each of the top 1000 terms of the collection, one query per term:

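```bash
# $engine selects the searchd container (hn_$engine); abort if it is unset
: "${engine:?set engine to the searchd container suffix}"
# time the whole loop: one query per term for the top 1000 terms
time for n in $(head -1000 hn_top.txt | awk '{print $1}'); do
    mysql -P9306 -h"hn_$engine" -e "select * from full where match('@(comment_text,story_text,comment_author,story_author) $n') limit 10 option max_matches=1000" > /dev/null
done
```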

The results are: 77.61 seconds for Sphinx and 71.46 seconds for Manticore.

So in this test Manticore Search is faster than Sphinx Search by 8.59%.
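The percentage is the relative difference of the two totals. Read the other way round, Manticore needed roughly 7.9% less time than Sphinx:

$$
\frac{77.61}{71.46} - 1 \approx 0.086 \approx 8.6\%,
\qquad
1 - \frac{71.46}{77.61} \approx 0.079 \approx 7.9\%
$$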

Test 2 - top 1000 frequent terms from the collection broken down by groups (top 1-50, top 50-100 etc.)

Now let’s see how Sphinx and Manticore differ when processing terms from subgroups of the top 1000 most frequent terms.
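The grouping can be sketched in Python as follows. This is a minimal illustration, not the code of the benchmark suite. It assumes that `hn_top.txt` lists terms in descending frequency order with the term in the first column, as the `awk '{print $1}'` in Test 1 suggests. The helpers `load_top_terms`, `split_into_groups` and `sample_queries` are hypothetical names, and drawing queries uniformly at random from a group is an assumption.

```python
import random

GROUP_SIZE = 50  # groups of 50 ranks: 1-50, 50-100, ..., 950-1000


def load_top_terms(lines, limit=1000):
    """Return the first `limit` terms, taking the first whitespace-separated
    column of each line (like: head -1000 hn_top.txt | awk '{print $1}')."""
    terms = []
    for line in lines:
        parts = line.split()
        if not parts:
            continue  # skip blank lines
        terms.append(parts[0])
        if len(terms) == limit:
            break
    return terms


def split_into_groups(terms, size=GROUP_SIZE):
    """Map labels such as '1-50' or '50-100' to the terms at those ranks."""
    return {
        f"{start or 1}-{start + size}": terms[start:start + size]
        for start in range(0, len(terms), size)
    }


def sample_queries(groups, per_group=3, seed=None):
    """Draw `per_group` distinct single-term queries at random from each group."""
    rng = random.Random(seed)
    return {
        label: rng.sample(group, min(per_group, len(group)))
        for label, group in groups.items()
    }
```

For example, `with open("hn_top.txt") as f: groups = split_into_groups(load_top_terms(f))` yields 20 groups of 50 terms each for a file with at least 1000 terms.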

To give a better idea of the queries, here are some random examples from each group:

| Group (frequency rank) | Example 1 | Example 2 | Example 3 |
|---|---|---|---|
| 1-50 | about | my | more |
| 50-100 | get | work | things |
| 100-150 | thing | am | seems |
| 150-200 | used | off | sure |
| 200-250 | different | system | didn |
| 250-300 | high | build | next |
| 300-350 | days | write | nothing |
| 350-400 | worth | server | phone |
| 400-450 | 20 | care | story |
| 450-500 | wanted | sites | recently |
| 500-550 | price | g | model |
| 550-600 | itself | file | store |
| 600-650 | hiring | client | iphone |
| 650-700 | smart | wish | reasons |
| 700-750 | welcome | wants | environment |
| 750-800 | choice | pg | generally |
| 800-850 | taken | details | css |
| 850-900 | topic | core | wondering |
| 900-950 | complete | resources | thoughts |
| 950-1000 | keywords | late | ipad |

Manticore is 6.8% faster than Sphinx in terms of 95th percentile (95p) latency and 12.2% faster in terms of throughput.

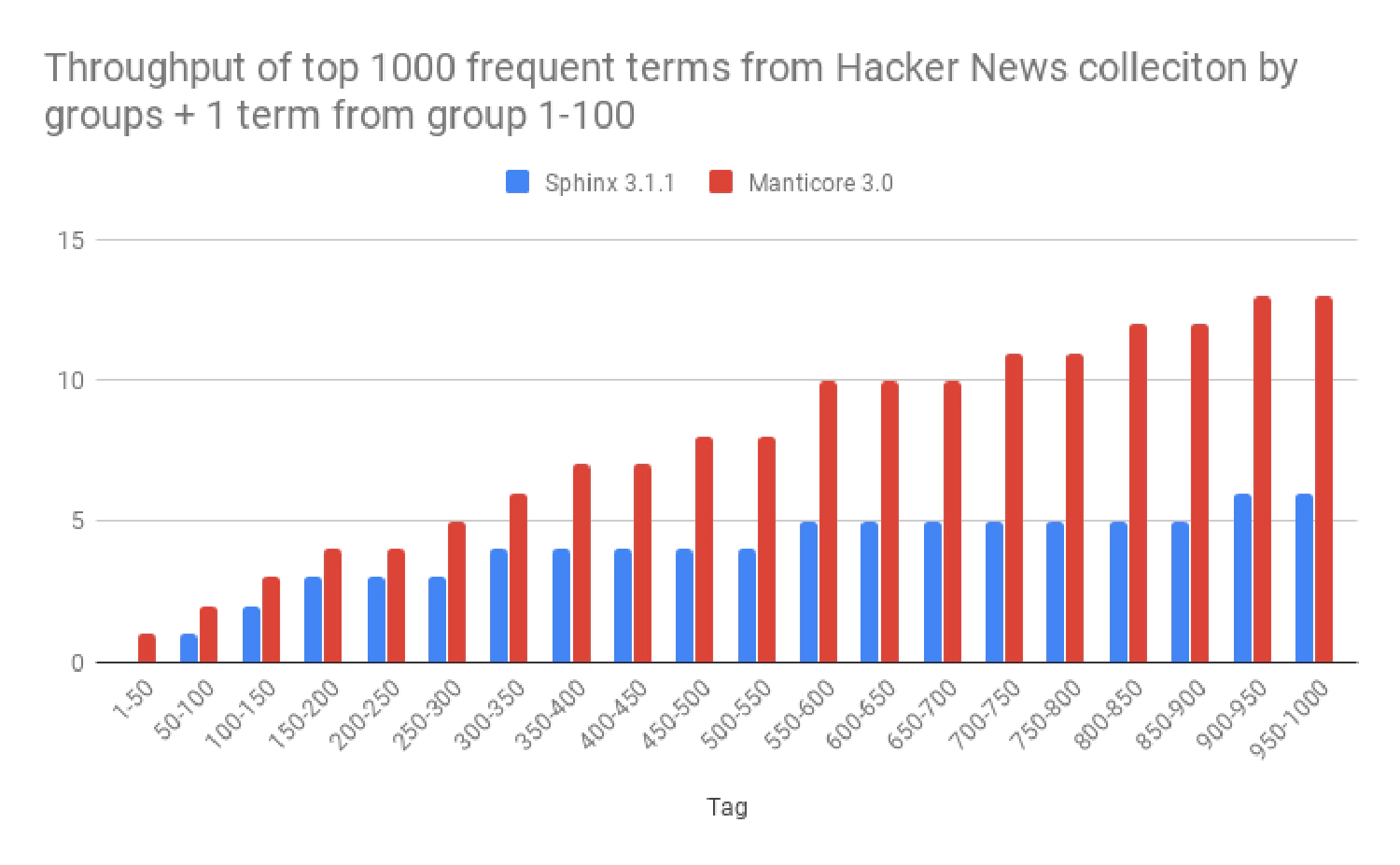

Test 3 - top 1000 frequent terms from the collection broken down by groups + 1 term from group 1-100

Let’s see how the engines handle queries with one very frequent term and one less frequent term. Examples:

| Group (frequency rank) | Example 1 | Example 2 | Example 3 |
|---|---|---|---|
| 1-50 | more be | them as | about was |
| 50-100 | that been | could other | make then |
| 100-150 | way being | can d | was etc |
| 150-200 | an off | think own | from app |
| 200-250 | just startup | really else | to little |
| 250-300 | had pay | if design | on yet |
| 300-350 | just either | not away | 1 write |
| 350-400 | a internet | by social | has means |
| 400-450 | who net | t past | most guys |
| 450-500 | you location | a sites | no matter |
| 500-550 | will program | a several | has space |
| 550-600 | t basically | has co | if basically |
| 600-650 | way programmers | was difficult | their weeks |
| 650-700 | see title | me successful | than yc |
| 700-750 | because words | com customer | because fine |
| 750-800 | think mac | is heard | also desktop |
| 800-850 | most expensive | on extremely | for understanding |
| 850-900 | time changed | been food | most traffic |
| 900-950 | if party | when serious | think speed |
| 950-1000 | really fairly | here servers | was lose |

Wow, Manticore is faster than Sphinx by 106% in throughput and, on average, by 91.8% in terms of 95p latency.

Test 4 - top 1000 frequent terms from the collection broken down by groups + 1 term from group 1-100, both terms enclosed in quotes to make a phrase

| Group (frequency rank) | Example 1 | Example 2 | Example 3 |
|---|---|---|---|
| 1-50 | "they all" | "http you" | "by some" |
| 50-100 | "think also" | "like been" | "use work" |
| 100-150 | "that code" | "is our" | "don well" |
| 150-200 | "we app" | "as article" | "i app" |
| 200-250 | "from give" | "use made" | "could different" |
| 250-300 | "by user" | "really least" | "if understand" |
| 300-350 | "other developer" | "has ideas" | "way facebook" |
| 350-400 | "by building" | "i python" | "be edit" |
| 400-450 | "want create" | "who given" | "up link" |
| 450-500 | "there front" | "has completely" | "use location" |
| 500-550 | "any government" | "has price" | "really deal" |
| 550-600 | "them consider" | "ve starting" | "now early" |
| 600-650 | "been weeks" | "make api" | "was note" |
| 650-700 | "up engineering" | "we expect" | "would themselves" |
| 700-750 | "has asking" | "really willing" | "http degree" |
| 750-800 | "s p" | "t step" | "http desktop" |
| 800-850 | "who css" | "because strong" | "not otherwise" |
| 850-900 | "there plus" | "for traffic" | "much food" |
| 900-950 | "s bring" | "this choose" | "now bring" |
| 950-1000 | "very require" | "all examples" | "be argument" |

Here Manticore is faster on average: by 11.8% in throughput and by 21.2% in 95p latency.
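The queries of Tests 3 and 4 can be sketched with one helper. Each query takes one term from the top 1-100 followed by one term from the tested group, in the same order as "more be" or "an off". Test 4 wraps the pair in double quotes so it is matched as a phrase. The name `pair_queries` and the uniform random choice are assumptions for illustration, and the benchmark's own query generator may differ.

```python
import random


def pair_queries(frequent_terms, group_terms, n, rng, phrase=False):
    """Build n two-term queries: a term from the top 1-100 followed by a term
    from the tested group. With phrase=True the pair is quoted ("a b") so the
    engine treats it as a phrase query, as in Test 4 (assumed generator)."""
    queries = []
    for _ in range(n):
        first = rng.choice(frequent_terms)
        # avoid queries like "more more" when the group overlaps the top 1-100
        candidates = [t for t in group_terms if t != first]
        if not candidates:
            raise ValueError("group has no term different from " + repr(first))
        second = rng.choice(candidates)
        query = f"{first} {second}"
        queries.append(f'"{query}"' if phrase else query)
    return queries
```

Each resulting string can go inside `match('...')` exactly like the single terms in the Test 1 loop.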

Test 5 - 2 terms each from group 600-750 under different concurrencies

This test aims to show the difference in throughput under different query concurrencies. Here’s what we get:

Manticore is faster at every concurrency level: its throughput is 31% higher on average, and its 95p latency is 28% lower.
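The following minimal Python sketch shows how throughput and 95p latency can be measured at a given concurrency. It is not how stress-tester is implemented. `run_query` is a hypothetical callable (for example, one that sends a SphinxQL query to port 9306). Throughput is taken as completed queries divided by wall-clock time. The percentile uses the nearest-rank definition, which is only one of several common choices.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def p95(latencies):
    """95th percentile by the nearest-rank method: the smallest sample such
    that at least 95% of all samples are less than or equal to it."""
    if not latencies:
        raise ValueError("no latency samples")
    ordered = sorted(latencies)
    rank = (95 * len(ordered) + 99) // 100  # ceil(0.95 * n) in integer arithmetic
    return ordered[rank - 1]


def run_at_concurrency(run_query, queries, concurrency):
    """Run every query once using `concurrency` worker threads.
    Returns (throughput in queries per second, 95p latency in seconds)."""

    def timed(query):
        start = time.perf_counter()
        run_query(query)
        return time.perf_counter() - start

    wall_start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(pool.map(timed, queries))
    wall = time.perf_counter() - wall_start
    return len(queries) / wall, p95(latencies)
```

Calling `run_at_concurrency` for several concurrency levels against each engine gives one throughput/95p pair per level, which is the kind of comparison this test reports.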

Test 6 - 3-5 terms from different groups

This test shows the difference on longer queries (3-5 terms):

- 3 terms, from groups 100-200, 400-500 and 800-900 respectively

- 4 terms, from groups 100-200, 300-400, 500-600 and 800-900 respectively

- 5 terms, from groups 100-200, 300-400, 500-600, 800-900 and 900-1000 respectively
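The recipes above can be sketched in Python as follows. The sketch assumes `top_terms` is the list of the 1000 most frequent terms in descending order of frequency (index 0 is rank 1), so a group such as 100-200 covers ranks 101-200. `RECIPES` and `multi_term_query` are hypothetical names, and picking one uniformly random term per group is an assumption about how the queries were drawn.

```python
import random

# Rank ranges per query length, from the list above; (100, 200) means ranks 101-200.
RECIPES = {
    3: [(100, 200), (400, 500), (800, 900)],
    4: [(100, 200), (300, 400), (500, 600), (800, 900)],
    5: [(100, 200), (300, 400), (500, 600), (800, 900), (900, 1000)],
}


def multi_term_query(top_terms, n_terms, rng):
    """Pick one random term from each rank range of the recipe for n_terms and
    join them with spaces. top_terms[0] is assumed to be the rank-1 term."""
    return " ".join(rng.choice(top_terms[lo:hi]) for lo, hi in RECIPES[n_terms])
```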

Query examples:

| 3 terms | 4 terms | 5 terms |
|---|---|---|
| job self release | using thanks second 12 | switch started b 12 places |
| every sites missing | around school model links | switch github class hate recent |
| his aren avoid | through jobs amount effect | switch facebook office absolutely english |

Manticore’s throughput is 77.6% higher and the 95p latency is 81.4% lower.

Test 7 - 3 AND terms from groups 300-600 and 1 NOT term from group 300-400

In this test we add a NOT term to a 3-term query:

Manticore’s throughput is 66.6% higher and its 95p latency is 57% lower.
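In Sphinx/Manticore extended query syntax, space-separated terms are implicitly AND-ed and a leading `-` excludes a term, so a query of this shape looks like `a b c -d`. Below is a minimal sketch of generating one. It assumes the three AND terms are drawn from the pooled ranks 301-600 (the heading does not say whether they come from one group each) and that the NOT term must differ from them. `and_not_query` is a hypothetical name.

```python
import random


def and_not_query(top_terms, rng):
    """Three AND-ed terms from ranks 301-600 plus one excluded term from ranks
    301-400, e.g. 'a b c -d'. top_terms[0] is assumed to be the rank-1 term."""
    and_terms = rng.sample(top_terms[300:600], 3)
    # the NOT term must not cancel one of the AND terms
    not_candidates = [t for t in top_terms[300:400] if t not in and_terms]
    not_term = rng.choice(not_candidates)
    return " ".join(and_terms) + " -" + not_term
```

The result goes into `match('...')` just like the single terms in Test 1.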

Conclusions

Sphinx indexes slightly faster: about 27 seconds (~2%) quicker over a roughly 21-minute indexing run.

As for search performance, which we consider much more important, Manticore 3.0.0 demonstrates higher throughput and lower latency in all the tests, by up to about 2x. The whole test is fully dockerized and open-sourced on our GitHub. The detailed results can be found here. We’d appreciate it if you ran the same tests on your hardware or added different tests to the suite and let us know the results.

If you’re thinking of migrating to Manticore 3, please read this article. Since your indexes may be large, we provide a new tool, index_converter, which converts existing Sphinx 2 / Manticore 2 indexes to the new Manticore 3 index format.

If you have any issues, questions or comments, feel free to contact us:

- on Twitter

- by sending an email to [email protected]

- posting on our Forum

- chatting with us in our Community Slack

- complaining about how bad everything is on our bug tracker on GitHub